Gaining the upper hand over complex data through multi-faceted methods

Wed. 02. Sep 2020 09:16

Complex projects like ImpleMentAll sometimes call for complex, well-thought-out solutions. During the first three years of ImpleMentAll, we faced a number of challenges on the way to better and more effective implementation of internet-based cognitive behavioural therapy (iCBT). This blog post presents three examples.

Anne, ImpleMentAll partner from the GET.ON Institute (HelloBetter), states: “I am not sure I can say there was one ‘major challenge’ at the beginning of the project in ImpleMentAll’s work package on Implementation management and knowledge transfer, but rather many medium or minor ones. And they continue to change and evolve throughout the project.”

One challenge was describing the different implementation sites, as both small and medium-sized enterprises and public institutions are part of the data collection. A good description and analysis of the sites was needed at the beginning of the project in order to understand the sites’ needs and implementation goals, and to be able to interpret the study’s results at a later stage. “It was challenging that the examined sites differed so much from each other. For example, the services are located at different points within the local healthcare systems depending on where they are needed. Most of the implementation sites provide services in primary care, while others provide them in secondary care or sometimes even in both. Still others offer their service mainly in the prevention sector. While this is great, as we learn much about interventions in different contexts, it was a challenge to capture for the project”, says Anne.

In addition, in several regions the local government is directly involved in the implementation process. A few partners include health insurance companies in their organisational setup, while other sites involve university structures in the implementation process. Experience from other projects had shown the team that it is important to understand the implementation environments properly in order to benefit most from the collected data. Therefore, we had to find a way to describe the different sites in one common format.

For this purpose, implementation science offers a multitude of tools to describe contexts and interventions. Advice from the External Advisory Board also brought up many valuable ideas. These considerations resulted in an initial questionnaire, which collected information about the implementation sites regarding the structure of the iCBT service, the target group, the routes of entry into the service, the technical solutions, and the implementation plans and goals. The questionnaire drew on several tools in order to capture all relevant facets.

This information was the basis for an initial description of the implementation sites, answering these major questions: which service is implemented (what), in which context (where), by which process (how), and which implementation activities were planned for the future. Read more in Deliverable 5.1 Implementation plans.

“Afterwards, we were continuously monitoring changes in the implementation sites, like adaptations to the iCBT services and changes at policy level”, says Anne. When the process evaluation started, more information on all relevant factors was gathered, and changes in different aspects of the implementation sites became visible over time. But how could all this information be brought together?

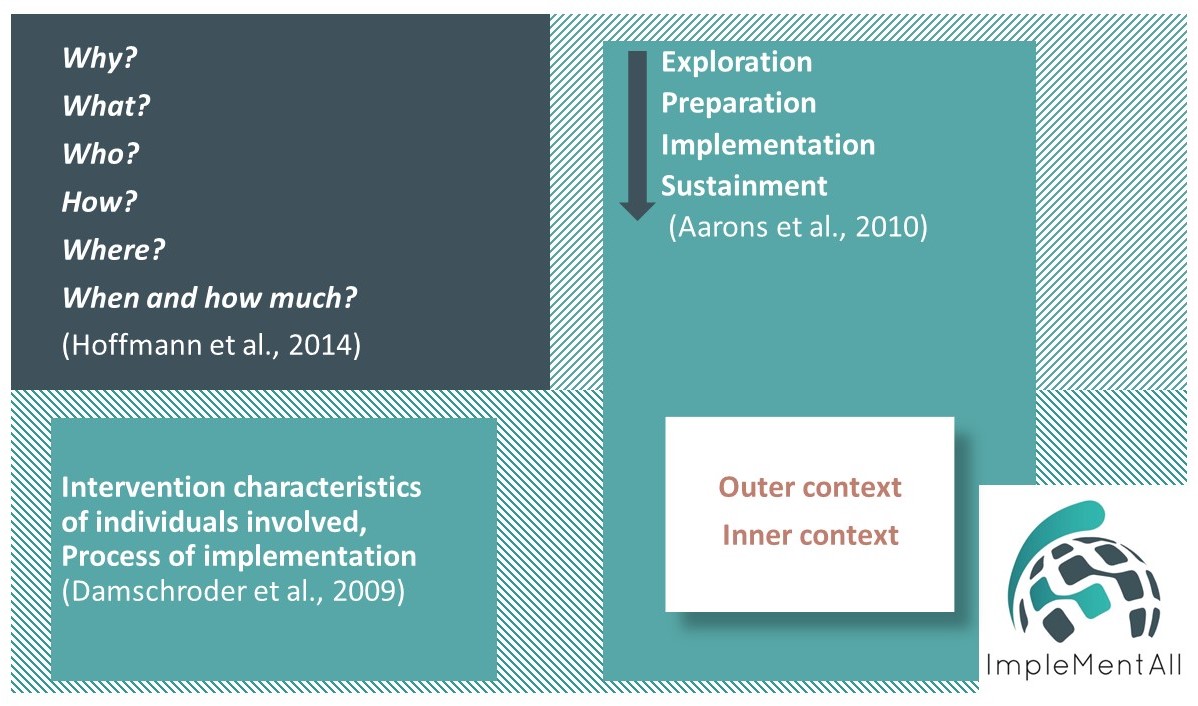

We decided to develop a matrix describing the services, involved parties, and context-related issues. For this purpose, three models were used:

- The Template for Intervention Description and Replication (TIDieR) checklist (Hoffmann et al., 2014)

- The Exploration, Preparation, Implementation, and Sustainment (EPIS) model (Aarons et al., 2010) and

- The Consolidated Framework for Implementation Research (CFIR; Damschroder et al., 2009).

In combination, these models allow for a multi-faceted description of the complex systems of the implementation sites and their change over time.

What do you think are the most relevant contextual factors when implementing iCBT?